This article is based on a true story. The names of the company and people involved have been changed to protect the innocent 🙂.

A few weeks ago, we were contacted by a pretty big e-commerce company. We can’t really share their name but, for the purpose of this story, let’s call them “KubeCorp Inc”. They reached out to us following an edge-case incident they had, which resulted in severe downtime.

The incident was spotted by the NOC team, which detected unusual CPU and memory consumption but couldn’t pinpoint which change in the system had caused it.

I thought it would be an interesting story to share, so – without further ado – here is the story of this incident.

Rick In A Pickle

Our story starts late at night when, around 1 am, an engineer on the KubeCorp NOC team (code name Rick) got bombarded by an unusual volume of OpsGenie alerts. The nodes’ CPU and memory usage were both on the rise, and the service response time was so long that the application became unresponsive. He also found the number of nodes to be peculiar, but he wasn’t sure if 200 nodes was enough reason to be alarmed.

Rick hopped over to the system’s dashboard on Datadog and noticed that the affected service had 4,000 pods, and that its metrics had been on the rise over the last few hours. This was a pretty steep increase from the usual 30 pods or so, but Rick wasn’t familiar enough with the system to realize just how unusual this really was. He did, however, have a feeling that 4,000 might be a few too many…

Following this hunch, Rick attempted to delete some nodes manually to remediate the issue, but this didn’t cut it, as the service remained unresponsive. Adding to the confusion, when Rick used kubectl to pull data for the service, it appeared to be healthy and ready, as if nothing had happened. This was the moment Rick realized he was in a real pickle.

The cluster had an HPA installed to automatically increase the number of pods, and an auto-scaler to increase the number of nodes in case of pending pods. But the questions remained: What could have caused the system to create 190 additional nodes? What went wrong with the service, and why was it consuming so many resources?

The Long Road to Solution

Rick checked the HPA and auto-scaler configuration and found that all the values were maxed out and some metrics were still rising. The HPA configuration had been in place for a long time, and the maximum threshold for pods was set high enough to make sure no one would have to wake up in the middle of the night to scale pods. So he and the other NOC team members on call decided to scan through all of the recent code changes on GitHub.

In a big company that uses a monorepo, with engineers from all over the world pushing changes through automated CI/CD pipelines, finding a single commit can be a real pain in the neck. After over an hour of going through code commits, they found a suspicious change with the commit message:

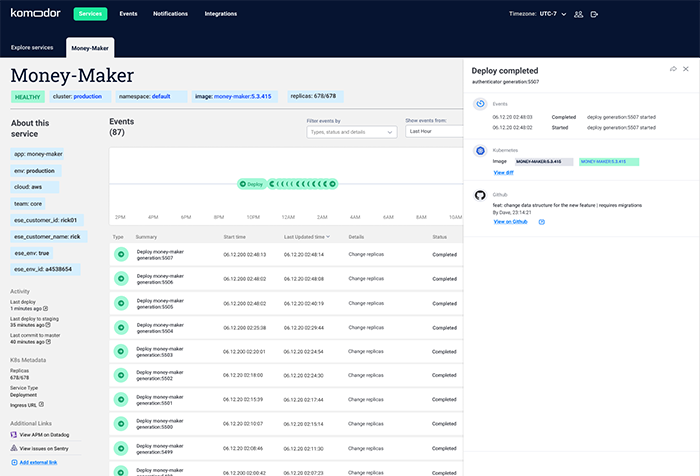

feat: change data structure for the new feature|requires migrations


The commit also included many changes to their DB schema, far more than you would reasonably expect to see. They realized that this change caused CPU usage to climb over time, and that the HPA, in response, started spinning up more and more pods based on the CPU metrics.
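To see why a CPU-bound regression can snowball like this, here is a minimal sketch of the scaling rule described in the Kubernetes HPA documentation: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default). The 50% CPU target, the 4,000-pod cap, and the assumption that CPU stays pinned at 100% no matter how many pods are added are illustrative guesses, not KubeCorp’s real configuration. The sketch also ignores scale-up rate limits and stabilization windows, so a real cluster would climb more slowly.

```python
import math


def desired_replicas(current_replicas, current_cpu_pct, target_cpu_pct,
                     max_replicas, tolerance=0.1):
    """Replica count the documented HPA formula would ask for.

    All parameter values passed in below are hypothetical.
    """
    ratio = current_cpu_pct / target_cpu_pct
    # Within the tolerance band the HPA leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Scale proportionally to the metric ratio, capped at maxReplicas.
    return min(max_replicas, math.ceil(current_replicas * ratio))


def simulate(start_replicas, cpu_pct, target_cpu_pct, max_replicas, rounds):
    """Repeat the calculation while CPU never drops (assumed regression)."""
    history = [start_replicas]
    replicas = start_replicas
    for _ in range(rounds):
        replicas = desired_replicas(replicas, cpu_pct, target_cpu_pct,
                                    max_replicas)
        history.append(replicas)
    return history


if __name__ == "__main__":
    # Hypothetical numbers: 30 pods, CPU stuck at 100%, 50% target, cap 4000.
    print(simulate(30, 100, 50, 4000, rounds=9))
```

Under these assumptions the replica count doubles every round until it hits the cap, because adding pods never brings the per-pod CPU back under the target. That is the pattern to look for when a code change, rather than traffic, is the cause.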

The change was pushed by one of Rick’s fellow developers, who was oblivious to the ramifications down the line. In a Kubernetes version of the “butterfly effect”, he had just made a little splash in the ocean of production code, and on the other end of the system, the on-call engineers were now facing a tsunami.

Unfortunately, they had to wake up their Chief DevOps and ask for help, because the team had already tried all the quick fixes in their toolbox, and they were also a bit worried about causing more harm if they kept trying.

A cup of Joe later, when the Chief DevOps (let’s call him Terry) finally sat down to troubleshoot, he found the situation to be much worse than expected. He ended up spending the rest of his night reverting the DB schema and code to align everything and make sure all nodes and pods could scale down automatically (with a little manual push) without impacting the system.

By the time the dust settled, the entire incident clocked 4.5 hours from the moment Rick first saw the initial OpsGenie alert.

What If They Had Komodor?

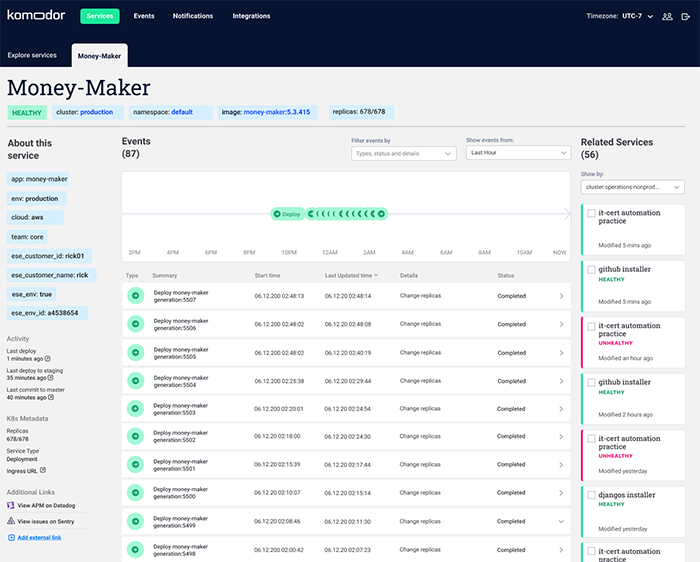

With Komodor, things could have been a lot simpler. First of all, with Komodor, Rick, the NOC team, the ops team, the developers, and DevOps could have all followed the incident on a single unified platform and been notified at the same time.

Looking at the timeline, Rick could have easily correlated the time of the alert with the time of the Git change and the auto-scaling of the pods and nodes. From there, the team would have been one click away from seeing the diff in GitHub and realizing that it was the root cause.

Clicking on the health event would have prompted step-by-step instructions for remediation, allowing Rick to independently revert the application automatically and scale down the nodes to steer the system back to its normal and healthy state.

The entire incident end-to-end would’ve been resolved in less than 30 minutes. This was exactly what we told them on the discovery call before they decided to start their Free Trial.