What Is Rancher?

Rancher is an open-source container management platform that simplifies the deployment, scaling, and management of Kubernetes clusters. It provides a user-friendly interface, advanced features, and integrations with popular DevOps tools, making it easier for developers and administrators to manage and orchestrate containers in a Kubernetes environment.

This is part of an extensive series of guides about Kubernetes.

Kubernetes vs. Rancher: What Is the Difference?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It is powerful but complex.

Rancher is an open-source container management platform built on top of Kubernetes. It simplifies Kubernetes cluster management, access control, and application deployment with a user-friendly interface and additional features.

Essentially, Kubernetes is the core orchestration platform, while Rancher is a management layer that enhances the user experience of working with Kubernetes. They are complementary technologies.

Learn more in our detailed guide to Kubernetes vs Rancher.

Tips from the expert

Itiel Shwartz

Co-Founder & CTO

In my experience, here are tips that can help you better manage and utilize Kubernetes Rancher:

Automate Infrastructure Provisioning

Use Infrastructure as Code (IaC) tools like Terraform to automate the provisioning of the infrastructure for your Rancher-managed Kubernetes clusters. This ensures consistency and repeatability across environments.

Enable Role-Based Access Control (RBAC)

Implement RBAC policies to control who can access and perform operations on your Kubernetes clusters. Rancher makes it easy to manage RBAC, but you should tailor the roles and permissions to fit your organization’s security requirements.
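The following is a minimal sketch of what such a policy can look like at the Kubernetes level: a Bash function that prints a Role granting read-only access to pods and services in one namespace, plus a RoleBinding for one user. The function name, the role name `read-only`, and the example namespace and user are hypothetical. Rancher adds its own global, cluster, and project roles on top of Kubernetes RBAC, and this sketch does not cover those.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a namespaced read-only Role and a RoleBinding
# that grants it to one user. Pipe the output to `kubectl apply -f -`.
print_readonly_role() {
  local namespace="$1" user="$2"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: read-only
  namespace: ${namespace}
rules:
  - apiGroups: [""]
    resources: ["pods", "services"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-only-${user}
  namespace: ${namespace}
subjects:
  - kind: User
    name: ${user}
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: read-only
  apiGroup: rbac.authorization.k8s.io
EOF
}
```

For example, `print_readonly_role dev alice | kubectl apply -f -` would create both objects in the `dev` namespace. Keeping the manifest in version control makes the granted permissions easy to review.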

Integrate with CI/CD Pipelines

Integrate Rancher with your CI/CD pipelines to automate the deployment and management of applications. Tools like Jenkins, GitLab CI, and GitHub Actions can be configured to interact with Rancher for seamless application delivery.
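As a hedged sketch, a pipeline job (in Jenkins, GitLab CI, GitHub Actions, or similar) might run a deploy step like the one below. It assumes the job already has a kubeconfig for the Rancher-managed cluster, for example one downloaded from the Rancher UI and stored as a CI secret, and that the application runs as a Deployment. The function name and its arguments are hypothetical; setting `KUBECTL=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical CI deploy step: roll a Deployment to a new image and wait
# for the rollout to finish. Assumes KUBECONFIG already points at the
# target cluster. Set KUBECTL=echo to print the commands instead.
ci_deploy() {
  local namespace="$1" deployment="$2" container="$3" image="$4"
  local kubectl="${KUBECTL:-kubectl}"

  # Update the container image; Kubernetes then performs a rolling update.
  "$kubectl" set image "deployment/${deployment}" "${container}=${image}" \
    --namespace "$namespace" || return 1

  # Block until the rollout completes, failing the job after 5 minutes.
  "$kubectl" rollout status "deployment/${deployment}" \
    --namespace "$namespace" --timeout=300s
}
```

A job could call it as `ci_deploy prod web web registry.example.com/web:1.2.3` (placeholder values). Waiting on `rollout status` makes the job fail if the new pods never become ready, rather than reporting success as soon as the image is changed.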

Use Multi-Cluster Applications

Leverage Rancher’s multi-cluster application capabilities to deploy and manage applications across multiple Kubernetes clusters. This enhances scalability and fault tolerance for your applications.
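Rancher's multi-cluster apps are managed from the Rancher UI (newer Rancher releases handle this through Fleet), so the following is not Rancher's mechanism. It is a simplified stand-in that shows the underlying idea of pushing one manifest to several clusters, assuming a kubeconfig with one context per cluster. The function name, context names, and manifest path are hypothetical, and `KUBECTL=echo` dry-runs the loop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply one manifest to several clusters, selecting
# each cluster by its kubeconfig context. Stops at the first failure.
apply_to_clusters() {
  local manifest="$1"; shift
  local kubectl="${KUBECTL:-kubectl}"
  local ctx
  for ctx in "$@"; do
    echo "==> ${ctx}" >&2   # progress message on stderr
    "$kubectl" --context "$ctx" apply -f "$manifest" || return 1
  done
}
```

For example, `apply_to_clusters app.yaml us-east eu-west` would apply `app.yaml` to the `us-east` and `eu-west` contexts in turn.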

Implement Cluster Templates

Use cluster templates in Rancher to standardize the configuration and deployment of Kubernetes clusters. This helps ensure that all clusters are configured consistently and according to best practices.

Rancher Platform Features

Infrastructure Orchestration

Infrastructure orchestration in Rancher refers to the process of automating the provisioning, management, and configuration of the underlying infrastructure that supports Kubernetes clusters.

Rancher simplifies the setup and management of Kubernetes clusters across different cloud providers and on-premises environments. It supports popular cloud platforms like AWS, Azure, Google Cloud, and VMware, as well as custom nodes and clusters, making it easier to manage and scale infrastructure resources.

Container Orchestration

Rancher integrates with Kubernetes to provide an enhanced container orchestration experience. It streamlines the deployment, scaling, and management of containerized applications, abstracting the complexities of Kubernetes through a user-friendly interface.

Rancher enables users to manage multiple Kubernetes clusters, deploy applications using Helm charts, and monitor the health of their clusters and workloads. It also simplifies the management of networking, storage, and load balancing for containers.
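Outside the UI, the Helm side of this workflow can be sketched as a small Bash function that adds a chart repository and installs or upgrades a release. This is a hypothetical helper, not part of Rancher; the repository name, URL, chart, release, and namespace are placeholders, and `HELM=echo` prints the commands without running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a chart repository, refresh the index, and
# install or upgrade a release. Set HELM=echo to print the commands.
deploy_chart() {
  local repo_name="$1" repo_url="$2" chart="$3" release="$4" namespace="$5"
  local helm="${HELM:-helm}"

  "$helm" repo add "$repo_name" "$repo_url" || return 1
  "$helm" repo update || return 1
  # "upgrade --install" installs the release if it is missing and
  # upgrades it otherwise.
  "$helm" upgrade --install "$release" "${repo_name}/${chart}" \
    --namespace "$namespace" --create-namespace
}
```

Because `helm upgrade --install` covers both the first install and later upgrades, the same step can be repeated from a script.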

Application Catalog

Rancher’s Application Catalog is a repository of pre-built application templates, including Helm charts and Rancher-specific templates, which simplify the deployment of containerized applications.

Users can browse, configure, and deploy applications with just a few clicks, without needing to manually create and manage Kubernetes manifests. The catalog also makes it easy to share applications across teams and organizations, improving collaboration and promoting best practices in application development and deployment.

Rancher Software

Rancher provides a suite of tools and services that complement the core Rancher platform to provide a comprehensive container management solution for Kubernetes environments. Some of the key software components include:

- Rancher: The primary component, Rancher is the container management platform built on top of Kubernetes. It simplifies cluster management, access control, and application deployment, providing a user-friendly interface and advanced features to streamline Kubernetes operations.

- RancherOS: A lightweight Linux distribution designed specifically for running containers. It minimizes the operating system (OS) footprint by running system services as containers, making it ideal for container-based environments.

- Longhorn: A cloud-native, distributed block storage solution for Kubernetes. Longhorn provides highly available and reliable storage for containerized applications, complete with automated backups, snapshotting, and replication capabilities.

- K3s: A lightweight Kubernetes distribution designed for edge computing, IoT, and resource-constrained environments. K3s is a fully conformant Kubernetes distribution that simplifies the deployment and management of Kubernetes clusters in scenarios where traditional Kubernetes may be too resource-intensive.

- RKE (Rancher Kubernetes Engine): An enterprise-grade Kubernetes installer and management tool. RKE simplifies the process of deploying and upgrading Kubernetes clusters by automating much of the configuration and management tasks, making it easier for teams to maintain and operate their Kubernetes infrastructure.

Related content: Read our guide to Rancher vs Openshift.

Tutorial: Setting Up a High-Availability RKE Kubernetes Cluster

For this tutorial, you will need to have the kubectl command-line tool installed. You also need to install the Rancher Kubernetes Engine (RKE).

Prerequisites

You can install RKE on Ubuntu 20.04 using the following set of commands:

curl -s https://api.github.com/repos/rancher/rke/releases/latest | grep download_url | grep linux-amd64 | cut -d '"' -f 4 | wget -qi -
chmod +x rke_linux-amd64
sudo mv rke_linux-amd64 /usr/local/bin/rke
rke --version
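The commands above assume an x86_64 host. As a hedged sketch, the following hypothetical function maps a `uname -m` value to an RKE release asset name, assuming the assets keep the `rke_linux-<arch>` naming used above; check the release page for the architectures actually published.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a `uname -m` value (default: this machine) to an
# RKE Linux asset name. Assumes assets follow the rke_linux-<arch> pattern.
rke_asset_for_arch() {
  local arch="${1:-$(uname -m)}"
  case "$arch" in
    x86_64 | amd64)  echo "rke_linux-amd64" ;;
    aarch64 | arm64) echo "rke_linux-arm64" ;;
    *) echo "unsupported architecture: ${arch}" >&2; return 1 ;;
  esac
}
```

The result, for example `asset="$(rke_asset_for_arch)"`, can replace the hard-coded architecture filter in the download pipeline.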

Step 1: Create a Cluster Configuration File

The first step is to create a configuration file for the Kubernetes cluster. In this example, we will call it rancher-cluster.yml. RKE reads this file later, when it installs Kubernetes on the nodes.

Use the following configuration to create the file, replacing the IP addresses in the nodes list with the IP addresses or DNS names of the three nodes you created.

If your nodes have both public and internal addresses, it is recommended to set internal_address for each node so that Kubernetes uses it for intra-cluster communication. Some services, such as AWS EC2, require setting internal_address if you want to use self-referencing security groups or firewalls.

RKE will need to connect to each node over SSH, and it will look for a private key in the default location of ~/.ssh/id_rsa. If your private key for a certain node is in a different location than the default, you will also need to configure the ssh_key_path option for that node.

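nodes:
  # All three nodes run every role (controlplane, worker, etcd), so the
  # cluster has a control plane and etcd keeps quorum if one node fails.
  - address: 54.224.252.229
    user: ubuntu
    role:
      - controlplane
      - worker
      - etcd
  - address: 54.173.222.78
    user: ubuntu
    role:
      - controlplane
      - worker
      - etcd
  - address: 174.129.76.243
    user: ubuntu
    role:
      - controlplane
      - worker
      - etcd

services:
  etcd:
    snapshot: true
    creation: 8h
    retention: 48h

ingress:
  provider: nginx
  options:
    use-forwarded-headers: "true"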
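If you provision nodes repeatedly, a small generator avoids hand-editing this file. The following Bash function is a hypothetical sketch that writes the same structure, giving every node the controlplane, worker, and etcd roles, which is the usual layout for a three-node high-availability RKE cluster. The function name and argument order are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical generator for rancher-cluster.yml. Every node gets all
# three roles. Example:
#   gen_cluster_yml ubuntu 10.0.0.1 10.0.0.2 10.0.0.3 > rancher-cluster.yml
gen_cluster_yml() {
  local ssh_user="$1"; shift
  if [ "$#" -lt 1 ]; then
    echo "usage: gen_cluster_yml USER ADDRESS..." >&2
    return 1
  fi

  echo "nodes:"
  local addr
  for addr in "$@"; do
    echo "  - address: ${addr}"
    echo "    user: ${ssh_user}"
    echo "    role: [controlplane, worker, etcd]"
  done

  # Static services and ingress settings, same values as above.
  cat <<'EOF'

services:
  etcd:
    snapshot: true
    creation: 8h
    retention: 48h

ingress:
  provider: nginx
  options:
    use-forwarded-headers: "true"
EOF
}
```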

Step 2: Run the Rancher Kubernetes Engine

A few important things to check before running Rancher Kubernetes Engine:

- The Ubuntu user must have necessary permissions on hosts to run docker

- The SSH key pair must be stored in ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub on the machine running RKE (unless ssh_key_path is set explicitly)

- Firewall rules must permit SSH connections

- RKE works with only certain Docker versions. Supported versions are 1.13.x, 17.03.x, 17.06.x, 17.09.x, 18.06.x, 18.09.x, 19.03.x, and 20.10.x.

- Ensure the non-sudo user can run Docker commands.

- If you did not write rancher-cluster.yml by hand in Step 1, you can generate it interactively instead; RKE prompts for each setting:

rke config --name rancher-cluster.yml
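As a pre-flight check for the Docker requirement above, the following hypothetical Bash function tests whether a Docker version string belongs to one of the listed series. The list is copied from this article and may not match the RKE release you install, so confirm it against the RKE release notes.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: succeed if a Docker version string
# (e.g. "20.10.21" or "17.03.2-ce") is in one of the series listed above.
docker_version_supported() {
  local version="$1"
  local series="${version%.*}"   # drop the patch part: "20.10.21" -> "20.10"
  case "$series" in
    1.13 | 17.03 | 17.06 | 17.09 | 18.06 | 18.09 | 19.03 | 20.10) return 0 ;;
    *) return 1 ;;
  esac
}
```

On each node, `docker_version_supported "$(docker version --format '{{.Server.Version}}')" || echo "unsupported Docker version"` reports a mismatch before you run RKE.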

To build the cluster, run RKE against the configuration file:

rke up --config ./rancher-cluster.yml

When rke up finishes, it should report that it has successfully built the Kubernetes cluster. RKE also writes a kubeconfig file named after the cluster configuration file, in this case kube_config_rancher-cluster.yml, which contains the credentials that kubectl and Helm use to access the cluster.

Step 3: Test the Cluster

Configure your workstation so that you can interact with the cluster via kubectl. kubectl needs to be able to find the kubeconfig file, which includes the credentials required to work with the cluster.

You can copy the kubeconfig file to $HOME/.kube/config, the default location kubectl reads. Alternatively, set the KUBECONFIG environment variable to the file's path, which makes it easier to work with multiple clusters:

export KUBECONFIG=$(pwd)/kube_config_rancher-cluster.yml

Here $(pwd) expands to the current working directory, so run the command from the directory that contains kube_config_rancher-cluster.yml.
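A hedged sketch of the same step as a reusable Bash function: it exports KUBECONFIG only if the kubeconfig RKE generated actually exists, so a wrong path is reported immediately. The function name is hypothetical, and it assumes RKE's kube_config_<cluster file name> naming.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point KUBECONFIG at the kubeconfig RKE wrote next to
# a cluster config file (kube_config_<name>.yml), if that file exists.
use_rke_kubeconfig() {
  local cluster_file="${1:-rancher-cluster.yml}"
  local dir base kubeconfig
  dir="$(cd "$(dirname "$cluster_file")" && pwd)" || return 1
  base="$(basename "$cluster_file")"
  kubeconfig="${dir}/kube_config_${base}"

  if [ ! -f "$kubeconfig" ]; then
    echo "kubeconfig not found: ${kubeconfig} (has rke up finished?)" >&2
    return 1
  fi
  export KUBECONFIG="$kubeconfig"
}
```

Call it in your current shell (not in a subshell) so the export persists, then run kubectl get nodes.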

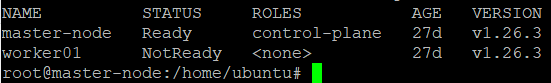

Use the kubectl get nodes command to check your connectivity and verify that all nodes are Ready. The output will look something like this:

Step 4: Perform a Cluster Health Check

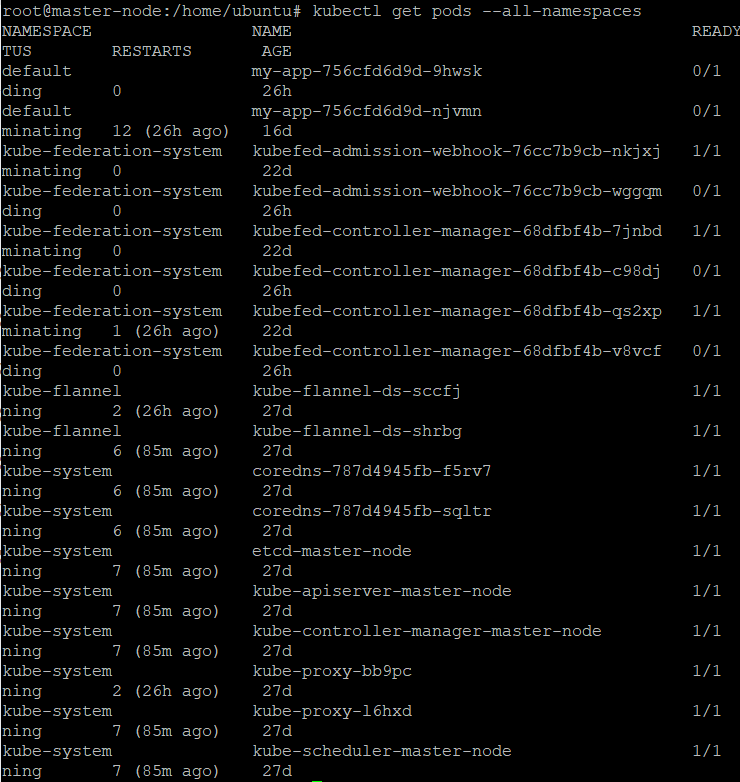

Check that all the necessary containers and pods in the cluster are healthy enough to continue. Use the kubectl get pods --all-namespaces command to view the pods’ status.

Pods should be in a Running or Completed state. For Running pods, the READY column should show all containers ready (for example, 3/3). Pods with a Completed status should be one-time jobs; their containers have exited, so they show 0/1 under READY.

If all running pods show their full container count as ready and the completed pods are one-time jobs showing 0/1, the cluster was installed successfully and is ready to run the Rancher server.
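The checks in Steps 3 and 4 can be scripted. This minimal sketch, with hypothetical function names, reads the --no-headers output of kubectl get nodes and kubectl get pods --all-namespaces on standard input and prints anything unhealthy: nodes whose STATUS is not exactly Ready (so a cordoned node showing Ready,SchedulingDisabled is also listed), and pods that are neither Completed nor Running with every container ready. It relies on the default column order of those commands.

```shell
#!/usr/bin/env bash
# Hypothetical health checks. Feed them kubectl output, for example:
#   kubectl get nodes --no-headers | unready_nodes
#   kubectl get pods --all-namespaces --no-headers | unhealthy_pods
# Each prints one line per problem and returns 1 if it found any.

# Columns: NAME STATUS ROLES AGE VERSION
unready_nodes() {
  awk '$2 != "Ready" { print $1 " " $2; bad = 1 } END { exit (bad ? 1 : 0) }'
}

# Columns: NAMESPACE NAME READY STATUS RESTARTS AGE
unhealthy_pods() {
  awk '
    $4 == "Completed" { next }   # finished one-time jobs are fine
    {
      split($3, ready, "/")      # e.g. "2/3" gives ready[1]=2, ready[2]=3
      if ($4 != "Running" || ready[1] != ready[2]) {
        print $1 "/" $2 " " $3 " " $4
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```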

All About Kubernetes Rancher with Komodor

Kubernetes environments are renowned for their dynamism and flexibility, but this also makes them incredibly challenging to troubleshoot when incidents arise. The sheer number of metrics, data, and logs to sift through to get a sense of the root cause of an issue can be overwhelming. Even answering simple questions such as “who changed what and when?” can be time-consuming and mentally taxing.

Thankfully, Komodor is a tool that complements Rancher by providing a clear and coherent timeline view of all relevant changes and events in any cluster, along with historical data that makes it easy to draw insights when investigating incidents. With Komodor, you can view pod logs directly in the platform without having to give kubectl access to every developer.

Furthermore, Komodor monitors every K8s resource and ensures compliance with best practices to prevent issues before they occur. It filters out irrelevant data and presents all relevant information while providing step-by-step instructions for remediation, automating away the manual checks typically required when troubleshooting and operating Kubernetes.

As Kubernetes clusters expand, cloud costs can skyrocket and become hard to manage, particularly when multiple departments, teams, and applications run in different environments or on shared clusters. Komodor offers centralized visibility, advice, optimization, and monitoring to ensure responsible Kubernetes growth and ideal performance.

To learn more about how Komodor can empower you and your teams to troubleshoot and operate K8s, sign up for our free trial.

See Additional Guides on Key Kubernetes Topics

Together with our content partners, we have authored in-depth guides on several other topics that can also be useful as you explore the world of Kubernetes.

Kubernetes Troubleshooting

Authored by Komodor

- Troubleshoot and Fix Kubernetes CrashLoopBackoff Status

- Kubernetes Troubleshooting – The Complete Guide

- What Are Kubernetes Pod Statuses and 4 Ways to Monitor Them

Container Security

Authored by Tigera

- What Is Container Security? 8 Best Practices You Must Know

- Docker Container Monitoring: Options, Challenges & Best Practices

- Top 5 Docker Security Risks and Best Practices

Argo Workflows

Authored by Codefresh