What is ErrImagePull / ImagePullBackOff?

Kubernetes pods sometimes experience issues when trying to pull container images from a container registry. If an error occurs, the pod goes into the ImagePullBackOff state.

When a Kubernetes cluster creates a new deployment, or updates an existing deployment, it typically needs to pull an image. This is done by the kubelet process on each worker node. For the kubelet to successfully pull the images, they need to be accessible from all nodes in the cluster that match the scheduling request.

So what are these errors?

- The ImagePullBackOff error occurs when the image path is incorrect, the network fails, or the kubelet does not succeed in authenticating with the container registry.

- Kubernetes initially throws the ErrImagePull error. After retrying a few times, it “backs off” and schedules another download attempt, reporting ImagePullBackOff while it waits. For each unsuccessful attempt, the delay increases exponentially, up to a maximum of 5 minutes.
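The following minimal bash sketch makes the retry timing concrete by printing the delay before each successive pull attempt. It assumes an initial delay of 10 seconds that doubles after every failure and is capped at the 5-minute (300-second) maximum. The starting value and the function name backoff_schedule are illustrative assumptions, not taken from the kubelet's source.

```shell
#!/usr/bin/env bash
# Illustrative sketch of capped exponential backoff (not the kubelet's code).
# Assumption: the first retry waits 10s, each failure doubles the wait,
# and the wait never exceeds 300s (5 minutes).
backoff_schedule() {
  local attempts=$1
  local delay=10 cap=300 i
  for ((i = 1; i <= attempts; i++)); do
    echo "$delay"
    delay=$((delay * 2))
    if ((delay > cap)); then
      delay=$cap
    fi
  done
}

# Print the delays (in seconds) before the first seven retries on one line
backoff_schedule 7 | paste -sd' ' -
```

Under these assumptions the output is 10 20 40 80 160 300 300. From the sixth retry onward, every attempt waits the full 5 minutes.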

This is part of a series of articles about Kubernetes troubleshooting.

How Does Kubernetes Work with Container Images?

A container image includes the binary data of an application and its software dependencies. This executable software bundle can run independently and makes well-defined assumptions about its runtime environment. You can create an application’s container image and push it to a registry before you refer to it in a pod.

A new container image typically has a descriptive name like kube-apiserver or pause. It can also include a registry hostname, such as test.registry.sample/imagename, and a port number, such as test.registry.sample:10553/imagename. Note that when you do not specify a registry hostname, Kubernetes assumes you refer to the Docker public registry.
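The naming rules above can be sketched as a small bash function that expands a short image name into a fully qualified reference. It approximates the normalization that Docker-compatible container runtimes apply. The first path component counts as a registry host only if it contains a dot or a colon, or is localhost. Otherwise docker.io is assumed, official Docker Hub images gain a library/ prefix, and a missing tag becomes latest. The function name normalize_image is hypothetical, and the sketch does not validate edge cases such as uppercase or malformed references.

```shell
#!/usr/bin/env bash
# Hypothetical helper: approximate how a short image reference is expanded.
normalize_image() {
  local ref=$1 registry rest first last
  first=${ref%%/*}                       # first path component
  if [[ $ref == */* && ( $first == *.* || $first == *:* || $first == localhost ) ]]; then
    registry=$first                      # looks like a hostname (optionally with port)
    rest=${ref#*/}
  else
    registry=docker.io                   # no registry given: assume Docker Hub
    rest=$ref
  fi
  if [[ $registry == docker.io && $rest != */* ]]; then
    rest="library/$rest"                 # official images live under library/
  fi
  last=${rest##*/}                       # only the last segment can carry a tag
  if [[ $rest != *@* && $last != *:* ]]; then
    rest="$rest:latest"                  # no tag and no digest: default to latest
  fi
  echo "$registry/$rest"
}

normalize_image nginx
normalize_image test.registry.sample:10553/imagename
```

For example, nginx expands to docker.io/library/nginx:latest, and test.registry.sample:10553/imagename expands to test.registry.sample:10553/imagename:latest. The port is not mistaken for a tag because only the last path segment is checked.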

After naming the image, you can add a tag to identify different versions of the same series of images.

Image Pull Policy

A container’s imagePullPolicy and its tag determine when the kubelet tries to download (pull) the image. Each imagePullPolicy value has a different effect:

- IfNotPresent: the kubelet pulls the image only if it is not already present on the node.

- Never: the kubelet never tries to fetch the image, so the image must already be present on the node.

- Always: every time it launches a container, the kubelet queries the container registry to resolve the image name to a digest, and downloads the image only if no image with that digest is cached locally.
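The following bash sketch shows how the policy and the tag interact. When imagePullPolicy is omitted, Kubernetes defaults it to Always if the tag is latest or missing, and to IfNotPresent otherwise, including for images referenced by digest. The helper names effective_pull_policy and contacts_registry are hypothetical. The "present locally" flag stands in for whatever image cache the node's container runtime actually has.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that model documented Kubernetes defaults (not kubelet code).

# Print the effective pull policy for an image, given an optional explicit policy.
effective_pull_policy() {
  local image=$1 policy=${2:-} last
  if [[ -n $policy ]]; then
    echo "$policy"                 # an explicit value always wins
    return
  fi
  last=${image##*/}                # drop registry/path so a port isn't read as a tag
  if [[ $image == *@* ]]; then
    echo IfNotPresent              # pinned by digest
  elif [[ $last != *:* || $last == *:latest ]]; then
    echo Always                    # no tag, or the latest tag
  else
    echo IfNotPresent              # any other explicit tag
  fi
}

# Print "yes" if the kubelet would contact the registry when starting the container.
contacts_registry() {
  local policy=$1 present_locally=$2
  case $policy in
    Always) echo yes ;;            # resolves the name to a digest every time
    IfNotPresent)
      if [[ $present_locally == true ]]; then echo no; else echo yes; fi ;;
    Never) echo no ;;              # the image must already be on the node
    *) echo "unknown policy: $policy" >&2; return 1 ;;
  esac
}
```

For example, effective_pull_policy nginx prints Always, effective_pull_policy nginx:1.25 prints IfNotPresent, and contacts_registry IfNotPresent true prints no.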

Container image versions

You can ensure a pod always uses the same version of a container image by specifying the image’s digest, that is, by replacing <image-name>:<tag> with <image-name>@<digest>. A digest identifies one specific version of an image, so Kubernetes runs the same code every time it starts a container with that image name and digest.

Be careful when relying on image tags alone. If the code that a tag points to changes in the registry, pods started before the change may keep running the old code while newer pods run the new code. Specifying the image by digest pins the code, so a change in the registry cannot lead to a mix of versions.
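Pinning can be sketched as a bash function that rewrites <image-name>:<tag> into <image-name>@<digest>. The function name pin_to_digest is hypothetical, and the digests in the example are placeholders, not real image digests. To find the digest a running pod actually resolved, kubectl get pod [name] -o jsonpath='{.status.containerStatuses[*].imageID}' typically reports it in <image-name>@sha256:... form, although the exact format can depend on the container runtime.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the tag (or an existing digest) with the given digest.
pin_to_digest() {
  local image=$1 digest=$2 name
  name=${image%@*}                    # drop an existing @digest, if present
  if [[ ${name##*/} == *:* ]]; then   # a ':' in the last path segment is a tag,
    name=${name%:*}                   # not a registry port, so strip it
  fi
  echo "$name@$digest"
}

pin_to_digest test.registry.sample:10553/imagename:v1 sha256:0123abcd
```

With the placeholder digest above, this prints test.registry.sample:10553/imagename@sha256:0123abcd, which is the form to put in the pod specification's image field.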

ImagePullBackOff and ErrImagePull Errors: Common Causes

| Cause | Resolution |
|---|---|
| Pod specification provides the wrong repository name | Edit the pod specification and provide the correct registry and repository name |
| Pod specification provides a wrong or unqualified image name | Edit the pod specification and provide the correct, fully qualified image name |
| Pod specification provides an invalid tag, or no tag | Edit the pod specification and provide the correct tag. If you omit the tag, the latest tag is used, so if the image has no latest tag you must provide a valid tag |
| Container registry is not accessible | Restore network connectivity to the registry and let the kubelet retry pulling the image |
| The pod does not have permission to access the image | Add a Secret with the appropriate credentials and reference it in the pod specification |

How to Fix ImagePullBackOff and ErrImagePull Errors

As mentioned, ImagePullBackOff is the result of repeated ErrImagePull errors, meaning the kubelet tried to pull a container image several times and failed. This indicates a persistent problem that needs to be addressed.

Step 1: Gather information

Run kubectl describe pod [name] and save the content to a text file for future reference:

kubectl describe pod [name] > /tmp/troubleshooting_describe_pod.txt
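If you gather this information often, a small bash wrapper can save the describe output and append the pod's events in one step. The function name, the optional namespace argument, and the default output path are illustrative assumptions. The kubectl get events --field-selector involvedObject.name=... form filters events down to the one pod.

```shell
#!/usr/bin/env bash
# Hypothetical helper: save diagnostics for one pod to a text file.
# Usage: collect_pod_diagnostics <pod> [namespace] [output-file]
collect_pod_diagnostics() {
  local pod=$1 ns=${2:-default} out=${3:-/tmp/troubleshooting_describe_pod.txt}
  {
    kubectl describe pod "$pod" --namespace "$ns"
    echo "--- events for $pod ---"
    kubectl get events --namespace "$ns" --field-selector "involvedObject.name=$pod"
  } > "$out"
  echo "saved diagnostics to $out"
}
```

For example, collect_pod_diagnostics my-pod team-a writes both outputs to /tmp/troubleshooting_describe_pod.txt. Here my-pod and team-a are placeholder names.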

Step 2: Examine the Events section in the describe output

Check the Events section of the describe pod text file, and look for one of the following messages:

Repository ... does not exist or no pull accessManifest ... not foundauthorization failed

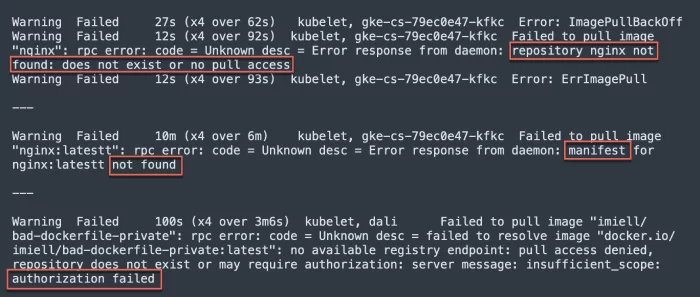

The image below shows examples of how each of these messages appears in the Events output.
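The three messages can also be matched automatically. The following sketch greps a saved describe output for each message and prints which troubleshooting branch applies. The function name and the output labels are hypothetical. Exact message wording can vary between container runtimes and Kubernetes versions, so the patterns are deliberately loose and case-insensitive, and you may need to extend them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map image-pull messages in a describe dump to a likely cause.
classify_pull_error() {
  local file=$1
  if grep -qiE 'repository .* does not exist|no pull access' "$file"; then
    echo "repository-not-found"      # wrong registry, repository, or image name
  elif grep -qiE 'manifest .* not found' "$file"; then
    echo "tag-not-found"             # the requested tag/version does not exist
  elif grep -qi 'authorization failed' "$file"; then
    echo "authorization-failed"      # missing or insufficient credentials
  else
    echo "unknown"                   # inspect the Events section manually
  fi
}
```

For example, classify_pull_error /tmp/troubleshooting_describe_pod.txt prints one of the four labels, pointing to the matching case in Step 3.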

Step 3: Troubleshoot and resolve

If the error is Repository ... does not exist or no pull access:

- This means that the repository specified in the pod does not exist in the Docker registry the cluster is using

- By default, images are pulled from Docker Hub, but your cluster may be using one or more private registries

- The error may occur because the pod does not specify the correct repository name, or does not specify the correct fully qualified image name (e.g. username/imagename)

To resolve it, double check the pod specification and ensure that the repository and image are specified correctly.

If this still doesn’t work, there may be a network issue preventing access to the container registry. Look in the describe pod text file to obtain the hostname of the Kubernetes node. Log into the node and try to download the image manually.
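You can script the node lookup against the saved file. In kubectl describe pod output, the Node: line usually has the form <node-name>/<node-ip>, and the following sketch extracts the name part. The function name node_from_describe is hypothetical. Once you are logged in to that node, a manual pull shows whether the node itself can reach the registry. Use crictl pull <image> on containerd or CRI-O nodes, or docker pull <image> on nodes that run Docker.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the node name from a saved `kubectl describe pod` file.
# Assumes the "Node:" line looks like "Node:  worker-1/10.0.0.12".
node_from_describe() {
  local file=$1
  awk '/^Node:/ { split($2, parts, "/"); print parts[1]; exit }' "$file"
}
```

The anchored /^Node:/ pattern skips similar fields such as Node-Selectors:, and exit stops at the first match.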

If the error is Manifest ... not found:

- This means that the specific version of the requested image was not found.

- If you specified a tag, this means the tag was incorrect.

To resolve it, double check that the tag in the pod specification is correct, and exists in the repository. Keep in mind that tags in the repo may have changed.

If you did not specify a tag, Kubernetes pulls the latest tag, so check whether the image has one. If it does not, the pull fails, and you must specify a valid tag explicitly.
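To see which tags actually exist, you can query the registry directly. The following sketch calls the tags/list endpoint of the Docker Registry HTTP API V2 (GET /v2/<name>/tags/list) with curl. The response is a JSON object such as {"name":"team/imagename","tags":["v1","v2"]}. The sketch assumes the registry allows anonymous reads over HTTPS. Docker Hub and most private registries first require a bearer token, which this sketch does not obtain. The function name and the example repository are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list tags for a repository via the Registry HTTP API V2.
# Assumes anonymous read access; authenticated registries need a token first.
list_tags() {
  local registry=$1 repo=$2
  curl --fail --silent --show-error "https://$registry/v2/$repo/tags/list"
}
```

For example, list_tags test.registry.sample:10553 team/imagename requests https://test.registry.sample:10553/v2/team/imagename/tags/list. If the tag from your pod specification is missing from the returned list, that explains the Manifest ... not found error.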

If the error is authorization failed:

- The issue is that the container registry, or the specific image you requested, cannot be accessed using the credentials you provided.

To resolve this, create a Secret with the appropriate credentials, and reference the Secret in the pod specification.
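The following is a minimal bash sketch of both steps. kubectl create secret docker-registry is a standard kubectl subcommand. The helper names, the secret name regcred, the pod and container names, and the credentials are placeholders. Passing a password as a command-line argument can leave it in shell history, so in practice you may prefer to read it from an environment variable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an image pull Secret from registry credentials.
create_pull_secret() {
  local name=$1 server=$2 user=$3 password=$4 ns=${5:-default}
  kubectl create secret docker-registry "$name" \
    --namespace="$ns" \
    --docker-server="$server" \
    --docker-username="$user" \
    --docker-password="$password"
}

# Hypothetical helper: print a minimal pod manifest that references the Secret.
pod_manifest_with_pull_secret() {
  local pod=$1 image=$2 secret=$3
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $pod
spec:
  containers:
    - name: app
      image: $image
  imagePullSecrets:
    - name: $secret
EOF
}
```

For example, run create_pull_secret regcred test.registry.sample:10553 myuser "$REGISTRY_PASSWORD", then pod_manifest_with_pull_secret demo-pod test.registry.sample:10553/imagename:v1 regcred | kubectl apply -f -.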

If you already have a Secret with credentials, ensure those credentials have permission to access the required image, or grant access in the container repository.

Solving Kubernetes Errors Once and for All With Komodor

Komodor is a Kubernetes troubleshooting platform that turns hours of guesswork into actionable answers in just a few clicks. Using Komodor, you can monitor, alert on, and troubleshoot ErrImagePull or ImagePullBackOff events.

For each K8s resource, Komodor automatically constructs a coherent view, including the relevant deploys, config changes, dependencies, metrics, and past incidents. Komodor seamlessly integrates and utilizes data from cloud providers, source controls, CI/CD pipelines, monitoring tools, and incident response platforms.

- Discover the root cause automatically with a timeline that tracks all changes in your application and infrastructure.

- Quickly tackle the issue, with easy-to-follow remediation instructions.

- Give your entire team a way to troubleshoot independently without escalating.