Alerts are everywhere: we get them from messaging apps, email, and social accounts – even from streaming services. Sometimes it can feel like we spend a huge amount of time prioritizing these alerts, deciding what to read and what to answer. At some point, the feeling of being bombarded by alerts, many of them unnecessary or unimportant, can desensitize you. And once you suffer from alert fatigue, you might end up missing an urgent alert, and the consequences can be severe.

This is especially true for DevOps engineers and developers, who are burdened with dozens of IT alerts constantly pouring in from multiple sources, on top of the day-to-day alerts they already receive. More alerts pile up every year as the number of software services deployed by firms keeps increasing. Between 2016 and 2019, for example, companies increased their software services by 68% on average, reaching about 120 services per company, according to an analysis by Okta.

Always account for the human factor

Alert fatigue is a symptom of the use of technology, but its origin and its solution actually both belong to the domain of management, organizational development, and psychology.

My startup, Komodor, is a vibrant, fast-growing, young startup with no legacy systems or out-of-date code. Still, there came a point when some of our teams’ coding failures went unnoticed, and ultimately we came close to suffering real damage by ignoring one of the many alerts that we had received. In our case, being so young and working hard to keep our code “clean” meant we also thought that we had calibrated our alerts properly. But if we missed such an important one, then something wasn’t quite right.

Coding failures are a natural part of the development process in every tech company. But an excess of alerts happens because teams don’t calibrate them properly. By making sure from the get-go that alerts only appear when they should, you can prevent alert fatigue and most of the associated collateral damage.
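To make "calibration" a little more concrete, here is a minimal sketch of one common way to cut noise: fire an alert only when a metric stays above its threshold for several consecutive samples, rather than on every momentary spike. The class name, the threshold, and the sample values are all hypothetical and chosen for illustration; this is not a description of how any particular monitoring tool works.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fire only when a metric exceeds a threshold for N consecutive samples."""

    def __init__(self, threshold: float, required_breaches: int) -> None:
        self.threshold = threshold
        self.required_breaches = required_breaches
        # Keep only the most recent samples needed for the decision.
        self.recent = deque(maxlen=required_breaches)

    def observe(self, value: float) -> bool:
        """Record one sample and return True if the alert should fire now."""
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.required_breaches and all(self.recent)


# Hypothetical error-rate samples (percent), one per minute.
samples = [1.0, 7.5, 2.0, 6.1, 6.4, 8.2, 3.0]
alert = SustainedThresholdAlert(threshold=5.0, required_breaches=3)
fired = [alert.observe(s) for s in samples]
print(fired)
```

In this sketch the isolated spike at 7.5 never fires; only the third consecutive breach (8.2) does. The right window size is a judgment call for each team and each metric.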

Can we measure the potential damage?

Alert fatigue can cause enormous damage to companies. To cope with this properly, we must first understand the different contributing factors:

- Ignored alerts that are real (a measurable factor): The most obvious example of collateral damage caused by alert fatigue is when a real alert is ignored, resulting in damage to customers and to your business. An internal retrospective can expose what caused the alert itself. Effective organizations will learn how to prevent these kinds of mistakes in the future.

However, that might not change the dismissal of future alerts. After all, the reason for ignoring the alert from the beginning was, well, alert fatigue; the retrospective, on the other hand, might only have focused on the alerted issue itself.

- Lost man-hours (a measurable factor): DevOps engineers’ time is valuable to your organization, because of the critical tasks they fulfill for infrastructure stability and smooth releases and operations. The more time they spend on fixing failures, the less your organization benefits from their talent and experience.

- Employee burnout (can’t be measured): It’s true that your engineers might ultimately succeed in hacking and resolving most significant alerts. It’s also true that your customers might not always be impacted directly by alert fatigue. However, the organization will still suffer from worn-out employees who handle too many alerts, critical and non-critical alike, since this will at the very least degrade productivity.

- Fear of failure (can’t be measured): Psychological effects can go as far as slowing down releases due to DevOps’ fear that one of these alerts is real and can impact customers.

Planning effective alerting

DevOps teams often deal with alert fatigue by way of a mix of actions and procedures. These can include implementing unified alert management systems, increasing the staff that manages alerts, and adopting more proactive and qualitative management of on-call engineers.

However, to cope with alert fatigue, I recommend building a preemptive strategy first and foremost. The strategy should be based on a thorough, yet simple, alert auditing process. Such a process should address mainly cultural and human engineering issues, and be enhanced with simple off-the-shelf steps:

- Get the data: Gather all relevant data such as the total number of alerts, alerts per system, alerts hacked, alerts that were automatically resolved, ignored alerts, alerts discussed, the average time of alert resolution, and the ratio of alerts per DevOps/R&D employee (whoever checks alerts in your organization). Put them in a spreadsheet with a column for each type.

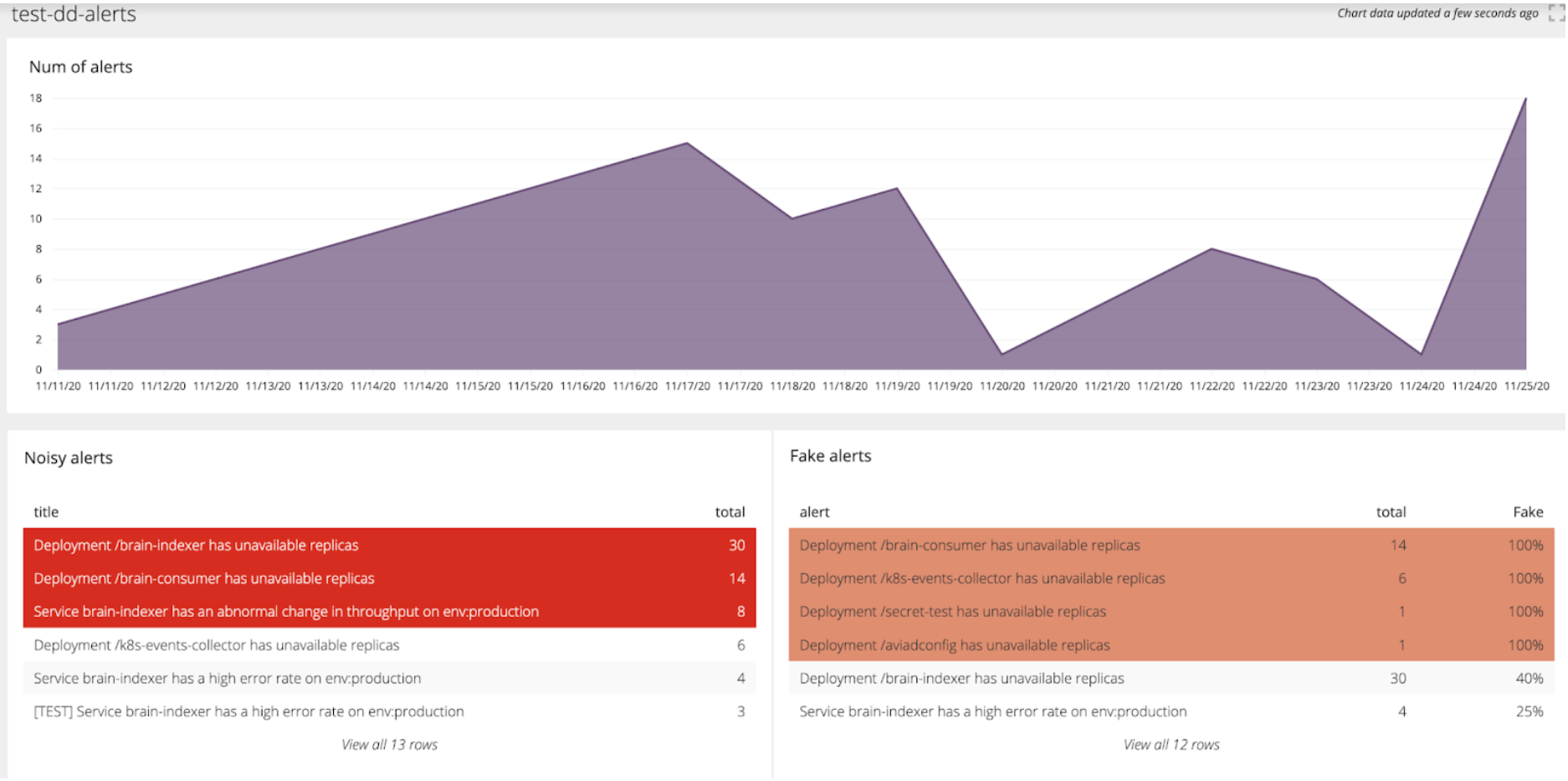

- Visualize the data: Build a script that continuously imports the data into your business intelligence (BI) tool – Chart.io, Tableau, Google Data Studio, or any other. Visualize the data, mark your thresholds, and look for trends. A rising number of total alerts or ignored alerts over time is something that you should pay attention to.

- Define KPIs: After visualizing your data and identifying trends, define KPIs that will help you understand how close you are to the goals you’ve set for your organization – at least the measurable ones. When a KPI is reached, create a new alert to announce that – but make sure this alert is never ignored.

- Create buffers: Reduce fear of failure by creating a safety buffer between the organization and your customers, and track possible code breakdowns. Pick any code and snippet tracking platform to do that, or use Komodor’s end-to-end smart troubleshooting platform.

An example visualization of the alert data
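The first and third audit steps above (gathering the data and defining KPIs) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Alert` record, its status labels, the sample alerts, and the KPI limits are all hypothetical, and in practice the records would come from an export of your own alerting tools.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Alert:
    system: str
    status: str  # hypothetical labels: "resolved", "auto_resolved", "ignored"
    minutes_to_resolve: Optional[float] = None  # None if never resolved


def audit(alerts: list[Alert], engineers: int) -> dict:
    """Summarize the audit numbers for one reporting period."""
    total = len(alerts)
    by_status = Counter(a.status for a in alerts)
    durations = [a.minutes_to_resolve for a in alerts
                 if a.minutes_to_resolve is not None]
    return {
        "total": total,
        "per_system": dict(Counter(a.system for a in alerts)),
        "ignored": by_status["ignored"],
        "auto_resolved": by_status["auto_resolved"],
        "ignored_ratio": by_status["ignored"] / total if total else 0.0,
        "avg_minutes_to_resolve": mean(durations) if durations else None,
        "alerts_per_engineer": total / engineers if engineers else None,
    }


def kpi_breaches(report: dict, limits: dict) -> list[str]:
    """Return the names of metrics that exceed their (assumed) KPI limits."""
    return [name for name, limit in limits.items()
            if report.get(name) is not None and report[name] > limit]


# Made-up sample data for illustration only.
alerts = [
    Alert("api", "resolved", 30.0),
    Alert("api", "ignored"),
    Alert("db", "auto_resolved", 5.0),
    Alert("db", "ignored"),
    Alert("queue", "resolved", 25.0),
]
report = audit(alerts, engineers=2)
breaches = kpi_breaches(report, {"ignored_ratio": 0.25, "alerts_per_engineer": 5})
print(report)
print(breaches)
```

Each key in the report maps to one spreadsheet column, so running the audit once per week or per sprint gives the time series you need to spot a rising trend in total or ignored alerts.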

Komodor tracks changes throughout your entire system, correlates and analyzes the complex dependencies of your system, and helps you discover the source of an alert and its effects. Doing this will enable you to kill two birds with one stone: reduce the fear of a meltdown before releasing a new feature, and sort out malfunctions in a calm, controlled environment. Komodor also allows you to estimate time-to-solve issues, thus addressing the lost man-hours issue.

As I discussed here, most alerts originate from human error: either an engineer introduced a code malfunction, or the alert wasn’t calibrated properly. For these reasons, it’s important to address these problems with better organizational processes. At the same time, it is also important to use technology that can ensure peace of mind and promote confidence by dealing with serious alerts according to a process, alleviating psychological effects of alert fatigue such as burnout and fear of failure.