What Is a Kubernetes Liveness Probe?

Liveness probes are a mechanism provided by Kubernetes which helps determine if applications running within containers are operational. This can help improve resilience and availability for Kubernetes pods.

By default, Kubernetes controllers check if a pod is running, and if not, restart it according to the pod’s restart policy. But in some cases, a pod might be running, even though the application running inside has malfunctioned. Liveness checks can provide more granular information to the kubelet, to help it understand whether applications are functional or not.

What Is The Health Probe Pattern?

Health probes are a concept that can help add resilience to mission-critical applications in Kubernetes, by helping them rapidly recover from failure. The “health probe pattern” is a design principle that defines how applications should report their health to Kubernetes.

The health state reported by the application can include:

- Liveness — whether the application is up and running

- Readiness — whether it is ready to accept requests

By understanding liveness and readiness of pods and containers on an ongoing basis, Kubernetes can make better decisions about load balancing and traffic routing.
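
As a minimal sketch of the pattern, assuming a Python web application, the server below exposes two hypothetical endpoints: `/healthz` for liveness and `/ready` for readiness. The endpoint paths, the `AppState` class, the `start_health_server` helper, and port 8080 are illustrative assumptions, not names that Kubernetes requires.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class AppState:
    """Hypothetical holder for the application's self-reported health."""

    def __init__(self):
        self.alive = True    # set to False once the app detects an unrecoverable fault
        self.ready = False   # set to True once startup work (caches, connections) is done


def make_handler(state):
    class HealthHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path == "/healthz":
                ok = state.alive
            elif self.path == "/ready":
                ok = state.alive and state.ready
            else:
                self.send_error(404)
                return
            # 200 reads as success to an HTTP probe; 503 reads as failure
            self.send_response(200 if ok else 503)
            self.end_headers()
            self.wfile.write(b"ok\n" if ok else b"unavailable\n")

        def log_message(self, fmt, *args):
            pass  # keep probe traffic out of the request log

    return HealthHandler


def start_health_server(state, port=8080):
    """Serve the health endpoints on a background thread and return the server."""
    server = HTTPServer(("0.0.0.0", port), make_handler(state))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

The application flips `state.ready` once it can serve traffic and clears `state.alive` if it detects a fault it cannot recover from, so each endpoint answers a different question.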

How Can You Apply the Health Probe Pattern in Kubernetes?

First, it’s important to understand that you can use Kubernetes without health probes. By default, Kubernetes uses its controllers, such as Deployment, DaemonSet or StatefulSet, to monitor the state of pods on Kubernetes nodes. If a controller identifies that a pod crashed, it automatically tries to restart the pod on an eligible node.

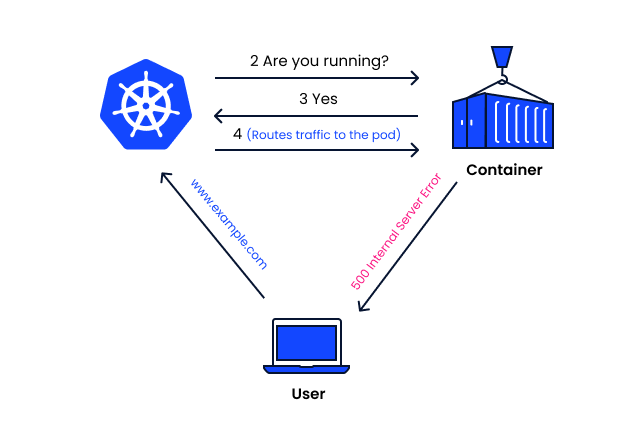

The problem with this default controller behavior is that in some cases, pods may appear to be running, but are not actually working. The following image illustrates this case—a pod is detected by the controller as working. But in reality, this pod hosts a web application, which is returning a server error to the user. So for all intents and purposes, the pod is not working.

To avoid this scenario, you can implement a health probe. The health probe can provide Kubernetes with more granular information about what is happening in the pod. This can help Kubernetes determine that the application is actually not functioning, and the pod should be restarted.

How Kubernetes Probes Work

In Kubernetes, probes are managed by the kubelet. The kubelet performs periodic diagnostics on containers running on the node. In order to support these diagnostics, a container must implement one of the following handlers:

- ExecAction handler: runs a command inside the container. The diagnostic succeeds if the command completes with status code 0.

- TCPSocketAction handler: attempts a TCP connection to the IP address of the pod on a specific port. The diagnostic succeeds if the port is found to be open.

- HTTPGetAction handler: performs an HTTP GET request, using the IP address of the pod, a specific port, and a specified path. The diagnostic succeeds if the response code is at least 200 and below 400.

Each probe the kubelet performs on a container returns one of three results: Success if the diagnostic passed, Failure if it failed, or Unknown if the diagnostic did not complete for some reason.
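
The success criteria of the three handlers can be modeled in a few lines. This is an illustrative Python sketch of the rules described above, not the kubelet's actual implementation; the function names and the choice of when to report Unknown are assumptions made for the example.

```python
import socket
import subprocess
import urllib.error
import urllib.request

SUCCESS, FAILURE, UNKNOWN = "Success", "Failure", "Unknown"


def exec_probe(command, timeout=1.0):
    """Exec-style check: succeeds if the command exits with status 0."""
    try:
        result = subprocess.run(command, timeout=timeout, capture_output=True)
    except subprocess.TimeoutExpired:
        return FAILURE
    except OSError:
        return UNKNOWN  # the check itself could not be run (assumed mapping)
    return SUCCESS if result.returncode == 0 else FAILURE


def tcp_probe(host, port, timeout=1.0):
    """TCP-style check: succeeds if a connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return SUCCESS
    except OSError:
        return FAILURE


def http_status_result(code):
    """HTTP rule: any status from 200 up to, but not including, 400 is a success."""
    return SUCCESS if 200 <= code < 400 else FAILURE


def http_probe(url, timeout=1.0):
    """HTTP-style check: issue a GET and classify the response code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            code = response.status
    except urllib.error.HTTPError as err:
        code = err.code  # 4xx and 5xx responses arrive as exceptions
    except OSError:
        return FAILURE   # connection refused, timeout, or DNS failure
    return http_status_result(code)
```

For example, `tcp_probe("127.0.0.1", 8080)` reports Failure when nothing is listening on that port, and `http_status_result(503)` reports Failure because the code falls outside the accepted range.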

What are the Three Types of Kubernetes Probes?

You can define three types of probes, each of which has different functionality, and supports different use cases. For all probe types, if a container does not define the probe, the probe’s default state is Success.

Liveness Probe

A liveness probe indicates if the container is operating:

- If it succeeds, no action is taken and no events are logged

- If it fails, the kubelet kills the container, and it is restarted in line with the pod’s restartPolicy
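
A liveness probe is declared per container in the pod spec. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The pod name, image, `/healthz` path, port, and timing values are placeholder assumptions; the probe field names (`livenessProbe`, `httpGet`, `initialDelaySeconds`, `periodSeconds`, `timeoutSeconds`, `failureThreshold`) are the standard Kubernetes ones.

```python
import json


def liveness_pod_manifest(name, image, path="/healthz", port=8080):
    """Return a Pod manifest whose single container has an HTTP liveness probe."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Always",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "livenessProbe": {
                        "httpGet": {"path": path, "port": port},
                        "initialDelaySeconds": 5,  # wait before the first check
                        "periodSeconds": 10,       # interval between checks
                        "timeoutSeconds": 1,       # per-check timeout
                        "failureThreshold": 3,     # consecutive failures before restart
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder name and image, for illustration only
    print(json.dumps(liveness_pod_manifest("web", "example.com/web:1.0"), indent=2))
```

With these example values, the container is restarted after three consecutive failed checks, which is about 30 seconds of continuous failure.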

Readiness Probe

A readiness probe indicates whether the application running on the container is ready to accept requests from clients:

- If it succeeds, services matching the pod continue sending traffic to the pod

- If it fails, the endpoints controller removes the pod from all Kubernetes Services matching the pod

Before the probe’s initial delay has elapsed, the default state of a readiness probe is Failure.

Startup Probe

A startup probe indicates whether the application running in the container has fully started:

- If it succeeds, other probes start their diagnostics. When a startup probe is defined, other probes do not operate until it succeeds

- If it fails, the kubelet kills the container, and it is restarted in line with the pod’s restartPolicy
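
A startup probe effectively gives the application a time budget: the container is killed only after `failureThreshold` consecutive failed checks, spaced `periodSeconds` apart. The sketch below, with placeholder names and values, pairs a startup probe with a liveness probe on the same hypothetical `/healthz` endpoint and estimates that budget. The fallback values used when a field is omitted (0, 3, and 10) mirror the Kubernetes defaults; the helper function itself is an assumption made for illustration.

```python
def startup_budget_seconds(probe):
    """Rough upper bound on startup time before the container is killed."""
    initial_delay = probe.get("initialDelaySeconds", 0)
    failure_threshold = probe.get("failureThreshold", 3)
    period = probe.get("periodSeconds", 10)
    return initial_delay + failure_threshold * period


# Both probes share one endpoint; only the thresholds differ.
health_check = {"httpGet": {"path": "/healthz", "port": 8080}}

container = {
    "name": "web",
    "image": "example.com/web:1.0",
    # Generous threshold: tolerate a slow start
    "startupProbe": {**health_check, "periodSeconds": 10, "failureThreshold": 30},
    # Tight threshold: react quickly once the app is up
    "livenessProbe": {**health_check, "periodSeconds": 10, "failureThreshold": 3},
}

print(startup_budget_seconds(container["startupProbe"]))  # 300
```

In this example the application has up to roughly five minutes to start, after which the liveness probe takes over with its shorter 30-second tolerance.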

When Should You Use a Liveness Probe?

A liveness probe is not necessary if the application running on a container is configured to automatically crash the container when a problem or error occurs. In this case, the kubelet will take the appropriate action—it will restart the container based on the pod’s restartPolicy.

You should use a liveness probe if you are not confident that the container will crash on any significant failure. In this case, a liveness probe can give the kubelet more granular information about the application on the container, and whether it can be considered operational.

If you use a liveness probe, make sure to set the restartPolicy to Always or OnFailure.

Liveness Probe Best Practices

The following best practices can help you make effective use of liveness probes. These best practices apply to Kubernetes clusters running version 1.16 and later:

- Prefer to use probes on applications that have unpredictable or fluctuating startup times

- If you use a liveness probe on the same endpoint as a startup probe, set the failureThreshold of the startup probe higher, to support long startup times

- You can use liveness and readiness probes on the same endpoint, but in this case, use the readiness probe to check startup behavior and the liveness probe to determine container health (in other words, downtime)

- Defining probes carefully can improve pod resilience and availability. Before defining a probe, observe the system behavior and average startup times of the pod and its containers

- Be sure to update probe options as an application evolves, or if you make infrastructure changes, such as giving the pod more or less system resources.
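
One way to turn observed startup times into a probe setting is sketched below. The helper name, the use of the slowest observed start, and the 1.5x safety factor are all assumptions chosen for illustration rather than official Kubernetes guidance; tune them to your own measurements.

```python
import math


def suggest_startup_failure_threshold(observed_startup_seconds,
                                      period_seconds=10,
                                      safety_factor=1.5):
    """Suggest a startup-probe failureThreshold from measured startup times.

    Takes the slowest observed start, pads it by safety_factor, and rounds up
    to a whole number of probe periods.
    """
    if not observed_startup_seconds:
        raise ValueError("need at least one observed startup time")
    budget = max(observed_startup_seconds) * safety_factor
    return max(1, math.ceil(budget / period_seconds))


# Hypothetical measurements, in seconds, from three recent rollouts
print(suggest_startup_failure_threshold([42, 55, 61]))  # 10
```

Re-running a calculation like this after resource or code changes is a simple way to keep probe options in step with how the application actually behaves.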

Improving Kubernetes Application Availability with Komodor

Probes are one of the most important tools for telling Kubernetes whether your application is healthy. A misconfigured probe, or one that responds inaccurately, can cause incidents. Without the right tools, troubleshooting such issues can become stressful, ineffective and time-consuming. Best practices can help minimize the chances of things breaking down, but eventually something will go wrong, simply because it can.

This is the reason why we created Komodor, a tool that helps dev and ops teams stop wasting their precious time looking for needles in (hay)stacks every time things go wrong.

Acting as a single source of truth (SSOT) for all of your k8s troubleshooting needs, Komodor offers:

- Change intelligence: Every issue is a result of a change. Within seconds we can help you understand exactly who did what and when.

- In-depth visibility: A complete activity timeline, showing all code and config changes, deployments, alerts, code diffs, pod logs, and more, all within one pane of glass with easy drill-down options.

- Insights into service dependencies: An easy way to understand cross-service changes and visualize their ripple effects across your entire system.

- Seamless notifications: Direct integration with your existing communication channels (e.g., Slack) so you’ll have all the information you need, when you need it.